DESCRIPTION

The syntactic test, together with the semantic test, belongs to the validation tests, with which the validity

of the input data is tested. This establishes the degree to which the system is proof against invalid, or ‘nonsense’

input that is offered to the system wilfully or otherwise. This test is also used to test the validity of output

data.

Validation tests focus on attributes, which should not be confused with data. An input screen or any other interface contains attributes that are (to be) filled with input values. If the attributes contain valid values, the system will generally process these and create or change certain data internally.

The test basis for the syntactic test consists of the syntactic rules, which specify the conditions an attribute must meet in order to be accepted as valid input/output by the system. These rules in effect describe the value domain for the relevant attribute. If a value outside this domain is offered for the attribute, the system should discontinue the processing in a controlled manner, usually with an error message.
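As a minimal sketch of this idea, the Python fragment below treats a syntactic rule as a value domain and rejects anything outside it with an error message instead of an unhandled failure. The names `ValidationResult`, `validate_attribute` and the attribute `code` are hypothetical and chosen only for illustration; the enumeration {M, F, N} is borrowed from the domain example further on in this section.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ValidationResult:
    accepted: bool
    error_message: Optional[str] = None


def validate_attribute(name: str, value: str, domain: frozenset) -> ValidationResult:
    """Accept the value only if it lies inside the attribute's value domain."""
    if value in domain:
        return ValidationResult(accepted=True)
    # Controlled rejection: report the problem instead of raising an unhandled error.
    return ValidationResult(
        accepted=False,
        error_message=f"Invalid value {value!r} for attribute {name!r}",
    )


# Hypothetical attribute with an enumerated value domain.
CODE_DOMAIN = frozenset({"M", "F", "N"})

valid = validate_attribute("code", "F", CODE_DOMAIN)
invalid = validate_attribute("code", "X", CODE_DOMAIN)
print(valid.accepted, invalid.accepted, invalid.error_message)
```

A syntactic test would then offer both kinds of value and check that the valid one is processed and the invalid one produces the error message.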

Syntactic rules may be established in various documents, but they are normally described in:

- The ‘data dictionary’ and other data models, in which the characteristics of all the data are described
- Functional specifications of the relevant function or input screen, containing the specific requirements in respect of the attributes.

The syntactic rules can be tested in any order and independently of each other. The coverage type applied here is Checklist.

In practice, the data input screens are usually used to test the syntactic checks. For practical reasons, this is often combined with the presentation tests, which test the layout of the screens. Presentation tests can be applied to both input (screens) and output (lists, reports).

Layout rules may be established in various documents, but are usually described in:

- Style guides, which often contain guidelines or rules for the whole organisation, concerning matters such as use of colour, fonts, screen layout, etc.
- Specifications for the layout of the relevant list or screen.

With both the validation test and the presentation test, checklists are used in which the checks are described that apply to each attribute or screen and that can be tested.

POINTS TO FOCUS IN THE STEPS

In principle, the generic steps (see Test Design Techniques) are also carried out for the SYN. However, the structure of a syntactic test is very simple: each attribute check and layout check is tested separately. Each check leads to one or more test situations, and each test situation generally leads to one test case.

For that reason, this section is restricted to the explanation of the first step, “Identifying test situations”. This

will focus on the compiling of the relevant checklists and the organisation of the test based on these.

1 - Identifying test situations

With the syntactic test, two kinds of checks may be applicable:

- Attribute checks, for the validation test
- Layout checks, for the presentation test.

An overview is given below of the commonest types of checks in respect of both kinds. These can be used to compile a

checklist that can be applied to every attribute/screen to be tested.

Overview of attribute checks

- Data type - e.g. numeric, alphabetical, alphanumeric, etc.
- Field length - The length of the input field is often limited. Investigate what happens when you attempt to exceed this length. (Hold down a letter key for some time.)
- Input / Output - There are 3 possibilities here:
  - I: No value is shown, but may be or must be entered
  - U: The value is shown, but may not be changed
  - UI: A value is shown, and may be changed.
- Default - If the attribute is not completed, the system should process the default value. If it concerns a UI field (see above), the default value should be shown.
- Mandatory / Non-mandatory - A mandatory attribute may not remain empty. A non-mandatory attribute may remain empty. In the processing, either the datum is left empty or the default value for this datum is used.
- Selection mechanism - A choice has to be made from a number of given possibilities. It is important here whether only one possibility may be chosen or several. This is particularly the case with GUIs (Graphical User Interfaces), e.g. with:
  - Radio buttons (try to activate several)
  - Check boxes (try to activate several)
  - Drop-down box (try to change the value or make it empty).
- Domain - This describes all the valid values for this attribute. It can, in principle, be shown in two ways:
  - Enumeration - For example {M, F, N}.
  - Value range - All the values between the given boundaries are permitted. The value boundaries themselves, in particular, should be tested. For example, [0,100>, where the symbols indicate that the value range is from 0 to 100, including the value 0, but excluding the value 100.
- Special characters - Is the system robust against special characters, such as quotes, input consisting solely of spaces, question marks, Ctrl characters, etc.?
- Format - For some attributes, specific requirements are set as regards format, e.g.:
  - Date - Common formats, for example, are YYYYMMDD or DD-MM-YY
  - Postcode - The postcode format in principle varies from country to country. In the Netherlands, the format for this is “1111 AA” (four digits followed by a space and two letters).
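Two of the attribute checks above, the value range and the format, lend themselves to a short illustration. The Python sketch below is hypothetical: the function names are invented for this example, the postcode rule assumes uppercase letters only, and the date check also rejects impossible calendar dates, which a real syntactic rule may or may not require.

```python
import re
from datetime import datetime

# Half-open value range [0,100>: 0 is included, 100 is excluded.
LOWER, UPPER = 0, 100


def in_value_range(value: int) -> bool:
    return LOWER <= value < UPPER


# Dutch postcode format "1111 AA": four digits, a space, two letters.
# Assumption: only uppercase ASCII letters are accepted.
POSTCODE_PATTERN = re.compile(r"[0-9]{4} [A-Z]{2}")


def is_valid_postcode(text: str) -> bool:
    return POSTCODE_PATTERN.fullmatch(text) is not None


def is_valid_date_yyyymmdd(text: str) -> bool:
    """Check the YYYYMMDD format and reject impossible calendar dates."""
    if len(text) != 8 or not (text.isascii() and text.isdigit()):
        return False
    try:
        datetime.strptime(text, "%Y%m%d")
    except ValueError:
        return False
    return True


# Test situations on and just outside the boundaries of the value range.
boundary_cases = {-1: False, 0: True, 99: True, 100: False}
for value, expected in boundary_cases.items():
    assert in_value_range(value) == expected
```

The boundary cases reflect the advice to test the value boundaries themselves: the values on each boundary and their nearest neighbours outside or inside the range.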

Overview of layout checks

- Headers / Footers - Are the standards being met in this regard? For example, it may be defined that the following information must be present:
  - Screen name or list name
  - System date or print date
  - Version number
- Attributes - Per attribute, specific formatting requirements are defined. For example:
  - Name of the attribute
  - Position of the attribute on the screen or overview
  - Reproduction of the attribute, such as font, colour, etc.
- Other screen objects (optional) - If necessary, such checks as are carried out on “Attributes” can be applied to other screen objects, such as “push buttons” and “drop-down lists”.

The checklist describes in general terms the type of check that should be carried out on a particular attribute or

screen. The concrete requirements in respect of the attribute or format that are related to this kind of check are

described in detail in the relevant system documentation, such as screen descriptions and the data dictionary.

The syntactic test is adequately specified if there is an overview of:

- All the attributes and screens that are to be tested
- All the checks (checklist) that are to be tested in the relevant attribute/screen.

During the test execution, the tester should keep the relevant system documentation to hand for the exact details of the checks. This method has the added advantage that the test specifications do not need to be changed when the system documentation changes.

An alternative option is to copy the details from the system documentation into the test specifications. The advantage

of this is that there is no need to search for details in the system documentation during the test execution. On the

other hand, it has the disadvantages that it requires disproportionately more work to specify the test and that there

is a risk that the test specifications will be out of step with the system documentation.

If there is a large number of attributes and screens, there is a risk of the number of checks to be carried out

becoming too high. In addition, the severity of the defects that are generally found with the syntactic test is quite

low. It can therefore be useful to restrict the testing effort by prioritising:

- Determine all the attributes/screens that are to be tested and sort them according to priority
- Determine all the checks that have to be applied to the attributes/screens and sort them according to priority
- First, carry out the tests with the highest-priority checks and attributes/screens. Depending on the number and severity of the found defects, it can be decided whether to continue with lower-priority tests.

Such a mechanism also offers excellent possibilities for managing the test and for reporting on progress, coverage and risks. A matrix can show which checks are to be carried out on which attributes and what priority they have. Each cell in the matrix represents a test situation to be tested. A “-” or shading in a cell indicates that the relevant check does not apply to that attribute.

Table 1 provides an example for the syntactic test of the function “Input booking”. The matrix can simply be expanded

for each function/screen for which a syntactic test is required. The priorities are indicated by H(igh), M(edium) and

L(ow). In this example, the priority is determined at function level and all the attributes of the selected function

are tested. However, another option is to allocate different priorities to the attributes within one function. Such a

refinement of course means a considerable amount of extra work.

| | Data type | Format | Domain | I/U | Selection | Mandatory | Field length | Special characters | Default |
|---|---|---|---|---|---|---|---|---|---|
| Input booking | H | H | H | M | M | M | L | L | L |
| Booking ID | | - | | | - | | | | - |
| Destination | | - | | | | | | | - |
| Airline | | - | | | | | | | - |
| Date of travel | | | | | - | | - | | |
| No. of passengers | | - | | | - | | | | |
| Class | | - | | | | | | | |
| … … | | | | | | | | | |

Table 1: Matrix of fields versus attribute checks for SYN.

During test execution, the same matrix can be used to indicate which test situations are tested and if necessary

which findings have been made.
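The matrix and the prioritisation described above can be sketched in a few lines of Python. The priorities and the non-applicable checks are read from Table 1; the names `CHECK_PRIORITY`, `NOT_APPLICABLE` and `build_test_situations` are hypothetical, and the elided “… …” row is left out.

```python
# Check columns and their priorities, from the "Input booking" row of Table 1.
CHECK_PRIORITY = {
    "Data type": "H", "Format": "H", "Domain": "H",
    "I/U": "M", "Selection": "M", "Mandatory": "M",
    "Field length": "L", "Special characters": "L", "Default": "L",
}

# Checks marked "-" in Table 1, i.e. not applicable to the attribute.
NOT_APPLICABLE = {
    "Booking ID": {"Format", "Selection", "Default"},
    "Destination": {"Format", "Default"},
    "Airline": {"Format", "Default"},
    "Date of travel": {"Selection", "Field length"},
    "No. of passengers": {"Format", "Selection"},
    "Class": {"Format"},
}

PRIORITY_ORDER = {"H": 0, "M": 1, "L": 2}


def build_test_situations():
    """Return (priority, attribute, check) for every applicable matrix cell."""
    situations = [
        (priority, attribute, check)
        for attribute, skipped in NOT_APPLICABLE.items()
        for check, priority in CHECK_PRIORITY.items()
        if check not in skipped
    ]
    # sorted() is stable, so the table order is kept within each priority.
    return sorted(situations, key=lambda s: PRIORITY_ORDER[s[0]])


situations = build_test_situations()
high_priority = [s for s in situations if s[0] == "H"]
print(len(situations), len(high_priority))
```

Executing the list from the top down runs the highest-priority test situations first, and a status or finding could be recorded against each tuple during test execution.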

2 - Creating logical test cases

The test situations from step 1 at once form the logical test cases.

3 - Creating physical test cases

If desired, physical test cases and a test script can be created. This is particularly useful if the testing

is to be carried out by inexperienced testers.

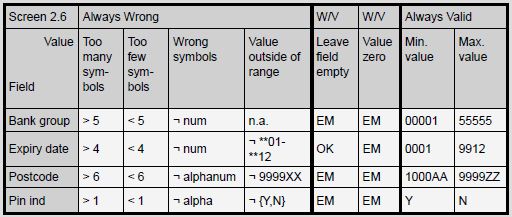

Table 2 shows an example of physical test cases. It contains 3 groups of test situations: the “always wrong” situations; situations that can sometimes be wrong and sometimes be right; and the “always valid” situations.

The abbreviation “EM” stands for “error message”. The symbol “¬” stands for “not”.

Table 2: Example of physical test cases for SYN.

However, the description of each test situation usually provides sufficient information for executing the test. In that case, it is advisable to record in concrete terms during the test execution what has been tested, so that the test can be reproduced. Where a defect is found, this is a requirement.

Tips - The syntactic test is ideally suited to automation. Besides the advantage of

reproducibility, the automated test in itself offers detailed documentation on what exactly has been tested and which

situations have been right or wrong.
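As a rough illustration of such automation, the sketch below drives a list of test situations through Python's standard `unittest` module. The function `accepts_passenger_count` is a hypothetical stand-in for the system under test, and the rule it implements (a whole number from 1 to 9) is an assumption made for this example, not a rule taken from the booking function.

```python
import unittest


def accepts_passenger_count(raw: str) -> bool:
    """Hypothetical stand-in for the system under test.

    Assumed rule (not from the source): a whole number from 1 to 9.
    """
    return raw.isascii() and raw.isdigit() and 1 <= int(raw) <= 9


class PassengerCountSyntaxTest(unittest.TestCase):
    # Each tuple is one test situation: (check, input, expected acceptance).
    SITUATIONS = [
        ("Data type", "abc", False),
        ("Mandatory", "", False),
        ("Domain", "0", False),
        ("Domain", "1", True),
        ("Domain", "9", True),
        ("Domain", "10", False),
        ("Special characters", "'", False),
    ]

    def test_situations(self):
        for check, raw, expected in self.SITUATIONS:
            # subTest reports every failing situation separately.
            with self.subTest(check=check, value=raw):
                self.assertEqual(accepts_passenger_count(raw), expected)


# Run the suite without exiting the interpreter; the runner's report
# documents what was tested and which situations passed or failed.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(PassengerCountSyntaxTest)
result = unittest.TextTestRunner(verbosity=2).run(suite)
```

Because the situations are plain data, the same list serves as documentation of what was tested, and the test can be repeated unchanged after every new release.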

4 - Establishing the starting point

No special requirements are normally set as regards the starting point. A possible exception is the testing of the syntax and layout of output such as lists and reports. Preparing a suitable starting point for these can avoid the situation in which a particular list with a particular value in a particular field can only be produced after a complex and lengthy series of actions.

Tips - Combine the syntactic tests of lists and reports as far as possible with the

functional tests (that produce the necessary variations of lists and reports), or do them at the end of such tests, so

that the database is already maximally filled with lists and reports.
